ArangoDB is a notable NoSQL database system that may be of interest to security and other informatics specialists that need to scale document collections (JSON), graphs, or name/value pairs–any or all in one implementation. The commercial entity behind Enterprise ArangoDB support is a German company out of Cologne that is currently looking to re-locate it’s HQ in the US.

ArangoDB is parallizable via sharding much in the model of Mongo; however an interesting feature is the ability to base shards on communities in the case of graphs–hence minimizing network overhead when following paths, looking for neighbors, etc. To my knowledge one has to actually come up with the community scheme and then re-arrange the shards accordingly–it is not dynamic.

Given the past discussion of graphing with networkx, I thought it may be interesting to think about how to persist data out-of-memory with a graphing database such as ArangoDB. I attended a presentation on ArangoDB at some point and went on to dabble with an ArangoDB instance to learn how to load graph data formatted in the Graph Modeling Language (GML).

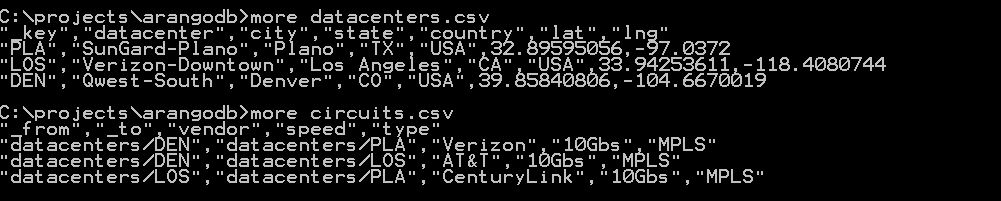

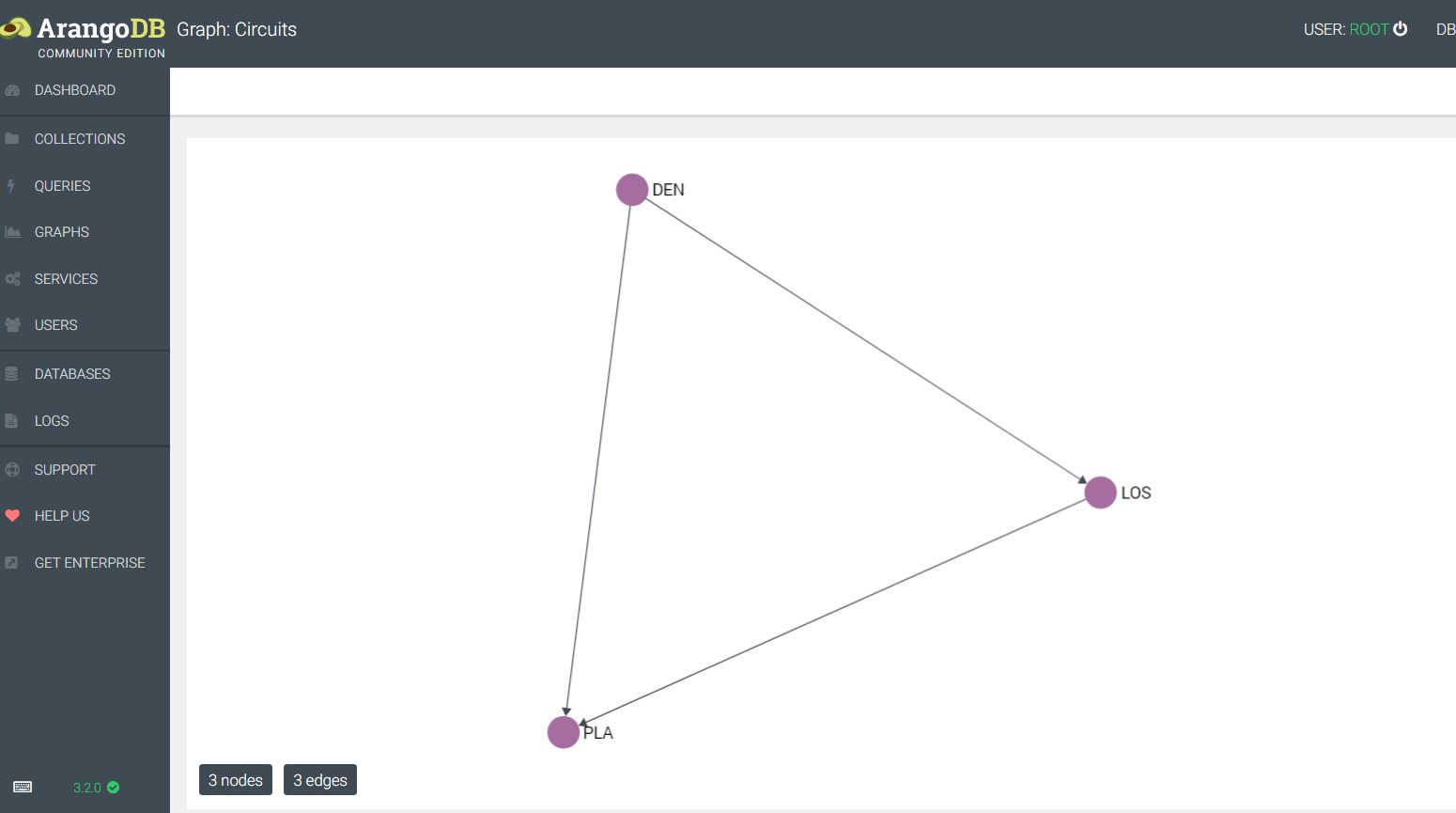

Arangodb has some nice sample data and tutorials to get users started setting up collections and querying with their custom SQL-like query language called AQL. For a quick initial demo I created a very simple collection of datacenters and circuits:

This allows for the import of the circuits into an edge collection, and the datacenters into a vertex collection. At this point a graph can be created and queried along the edges. If we wanted to see which datacenters were available via private circuits and the associated shortest path, this would be doable within this scheme much more efficiently versus a traditional RDBMS. Next I could put detailed circuit information and contacts into an associated JSON document and be able to pull up information along the way–e.g. I could query the path router by router. ArangoDB implements edges and vertices as JSON documents, with a from and to relationship in the case of edges.

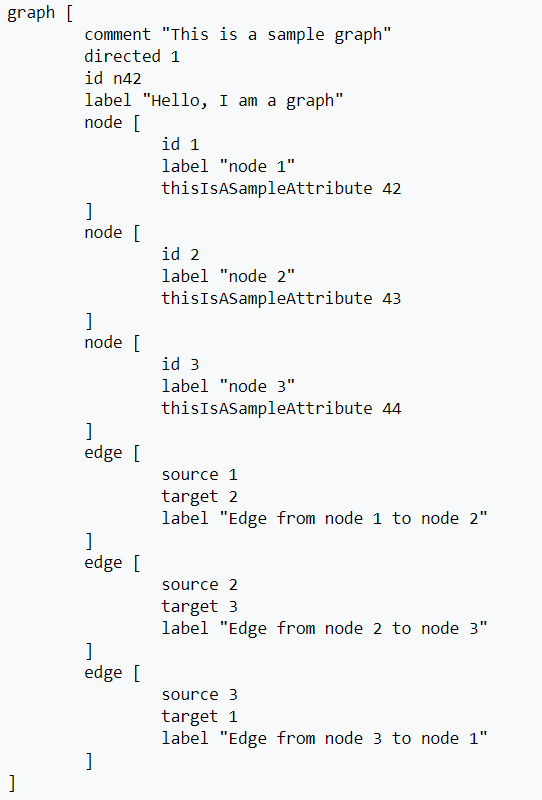

Referring back to the Autonomous Systems routing organization data that was discussed in this blog recently, we see that this used a GML dataset and was loaded via Python networkx. What if we wanted to load the GML into ArangoDB? To my knowledge there is no native support for this: one has to break the dataset into nodes and edges and then import as collections. It’s fairly simple to condition a GML file for this-see the routines in cond_gml.py here. Looking at the Wikipedia example below it is easy to see that GML is straightforward to parse into CSV.

The repository contains routines to do this parsing for you: this simply consists of breaking it out into edges and nodes. Disclaimer: I need to verify and test with more GML dataset examples to validate that this works universally, but because the logic is trivial any user should be able to modify and tweak as needed.

In examining edges, given that there is this from, to structure to each edge in ArangoDB, it gets confusing as to whether every graph is explicitly directional. A graph can be queried using syntax that specifies Inbound, Outbound, or Any–thus the graphs can be interpreted and queried as undirected.

def edgeparser(ary):

# create a source and target list, then push them into a result list of tuplets

result,source,target = [],[],[]

for item in ary:

if 'source' in item:

num = re.findall('\d+',item)

# use join and int to get rid of brackets and quotes

source.append(int(''.join(num)))

if 'target' in item:

num = re.findall('\d+',item)

target.append(int(''.join(num)))

for i in range(len(source)):

result.append((source[i],target[i]))

return result

##############################################################

# name: nodeparser.py

# purpose: utility routine to harvest nodes from GML

# input: list ary # ary contains the raw data

# output: results # list of tuplets is a list of tuplets # (<node id>, <node label>

##############################################################

def nodeparser(ary):

# create a source and target list, then push them into a result list of tuplets

result,ident,label = [],[],[]

for item in ary:

if 'id' in item:

num = re.findall('\d+',item)

# use join and int to get rid of brackets and quotes

ident.append(int(''.join(num)))

if 'label' in item: # label is the actual node name

num = re.findall('\d+',item)

label.append(int(''.join(num)))

for i in range(len(label)):

result.append((ident[i],label[i]))

return result

Once we have the nodes and the edges, we can input as CSV using the ArangoDB console GUI or the ArangoDBsh.exe command shell. However, it is a more useful exercise to learn how using Python. There are two popular Python drivers for ArangoDB–I chose to work with pyArango simply because the ArangoDB.com site uses that module in their tutorials and documentation (I believe the creator of pyArango was a contractor with ArangodB commercial). Feel free to switch if the other module turns out to be superior or simpler.

It’s not exactly simple to load graphs with the pyArango module. One needs to heed the example code and create some overhead classes. I’m unaware of much in the way of documentation for this, but here is an example I coded of implementing a tuplet loader. GML datasets can be interpreted as a pair of files containing tuplets [node labels, node ids] , [edge from, edge to]. Again, all of the code is in the repository.

#######################################################

# name: gml_loader.py

# purpose: load graphs into arangodb based on conditioned files from a GML

# need an edges.txt and a nodes.txt file - see cond-gml.py for creating these

# input: strings username, password, database (default is _system)

#

# author: adapted from pyrango example by Ray Zupancic

#######################################################

import sys

import getopt

from pyArango.connection import *

from pyArango.graph import *

from pyArango.collection import *

class AS(object):

class nodes(Collection) :

_fields = {

"name" : Field()

}

class paths(Edges) :

_fields = {

"number" : Field()

}

class as2006(Graph) :

_edgeDefinitions = (EdgeDefinition ('paths',

fromCollections = ["nodes"],

toCollections = ["nodes"]),)

_orphanedCollections = []

def __init__(self,nodes_tup,edges_tup, user, pw, db = "_system"):

self.nodes_tuples = nodes_tup

self.edges_tuples = edges_tup

self.conn = Connection(username=user, password=pw)

ndict = {}

self.db = self.conn[db]

self.nodes = self.db.createCollection(className = "nodes")

self.paths = self.db.createCollection(className ="paths")

g = self.db.createGraph(db)

for item in self.nodes_tuples:

# uncomment if you want to watch the routine load

#print("id:", item[0]," label:", item[1])

n = g.createVertex('nodes', {"name": item[1], "_key": str(item[0])})

n.save()

ndict[item[0]] = n

for item in self.edges_tuples:

print(item)

strval = str(item[0]) + "_" + str(item[1])

g.link('paths', ndict[item[0]],ndict[item[1]], {"type": 'number', "_key": strval})

#####################

# name: stub to run AS - Autonomous System Graphing Class

#####################

def main(argv):

if len(sys.argv) <3:

print('usage: gml_loader.py -d database -u <arangodb_username> -p <arangodb_password>')

sys.exit()

try:

opts, args = getopt.getopt(argv,"hd:u:p:",["username=","password="])

except getopt.GetoptError:

print('gml_loader.py -d database -u <arangodb_username> -p <arangodb_password>')

sys.exit(2)

for opt, arg in opts:

if opt == '-h':

print('gml_loader.py -d database -u <arangodb_username> -p <arangodb_password>')

sys.exit()

elif opt in ("-u", "--username"):

username = arg

elif opt in ("-p", "--password"):

password = arg

elif opt in ("-d", "--database"):

db= arg

print( 'database: ', db)

print( 'username: ', username)

print( 'password: ', password[0:2] + '*****')

# read in the paths and nodes as tuplets

with open('edges.txt') as f:

edges_col = []

for line in f:

#strip new-line, split into a tuple, and append as integer rather than string

val1,val2 = line.rstrip('\n').split(',')

edges_col.append((int(val1),int(val2)))

with open('nodes.txt') as f:

nodes_col = []

for line in f:

#strip new-line, split into a tuple, and append as integer rather than string

val1,val2 = line.rstrip('\n').split(',')

nodes_col.append((int(val1),int(val2)))

AS(nodes_col, edges_col, username, password, db)

if __name__ == "__main__":

main(sys.argv[1:])

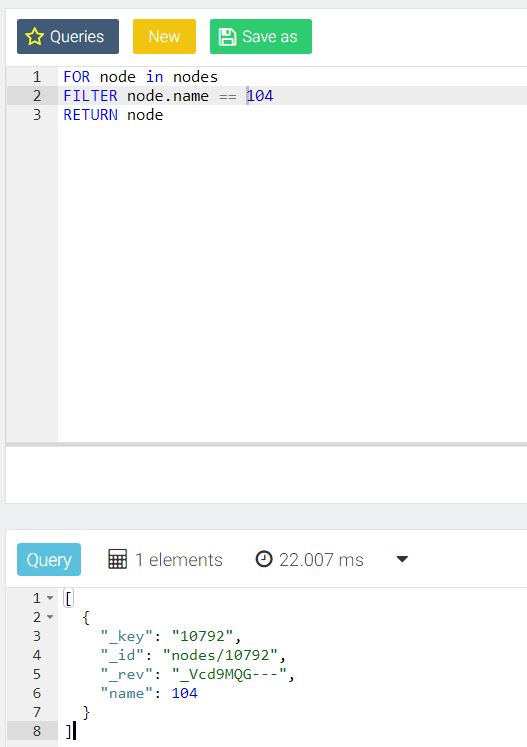

Once you have the AS dataset loaded, you can run queries on it using AQL or embed AQL in pyArango. Here are some examples:

#######################################################

# name: get_shortest_path

# purpose: get shortest path between two nodes

# input: conn_object: db; strings: node1, node2

# ouput: path

#

#######################################################

def get_shortest_path(db, n1, n2):

aql = "FOR e, v IN ANY SHORTEST_PATH 'nodes/{}' TO 'nodes/{}' GRAPH 'as2006' RETURN [e, v]".format(n1,n2)

return db.AQLQuery(aql, rawResults=True, batchSize=100)

If we want to find the shortest path between Univ. of Colo Boulder and Indiana Univ. we just feed in their AS #’s.

number of edges: 48436

number of nodes: 22963

key of node 400: 10836

Shortest path between IU and CU: 5

Note that I said shortest path in terms of AS hops and not necessarily the most optimal–this is simply a graph query demonstration, and, also, this is data from 2006 used to demonstrate graphing concepts. Because of the fact that the vertices are JSON docs and could hold meta-information about connectivity, it would possible to embed enough data to find optimal paths: e.g. break the AS’s down into a circuit-by-circuit, router-by-router network as was being hinted at with the datacenter/circuit relationship introduced above.

The ArangoDB console GUI tool is very nice for ad hoc queries as demonstrated below:

Summing up, this is very manageable and free software that can complement or even replace Mongo in some instances, and may prove invaluable to graph-oriented x-informatics projects.